Manuvir Das, Nvidia's vice president of enterprise, said in an interview: "The sellable commercial product was the GPU, and the software was all to help people use the GPU in different ways. Of course, we still do that. But what's really changed is that we now have a commercial software business." Das said Nvidia's new software will make it easier to run programs on any Nvidia GPU, even older ones that may be better suited to deploying AI than to building it. "If you're a developer and you have an interesting model you want people to adopt, put it in a NIM and we'll make sure that it runs on all of our GPUs," Das said.

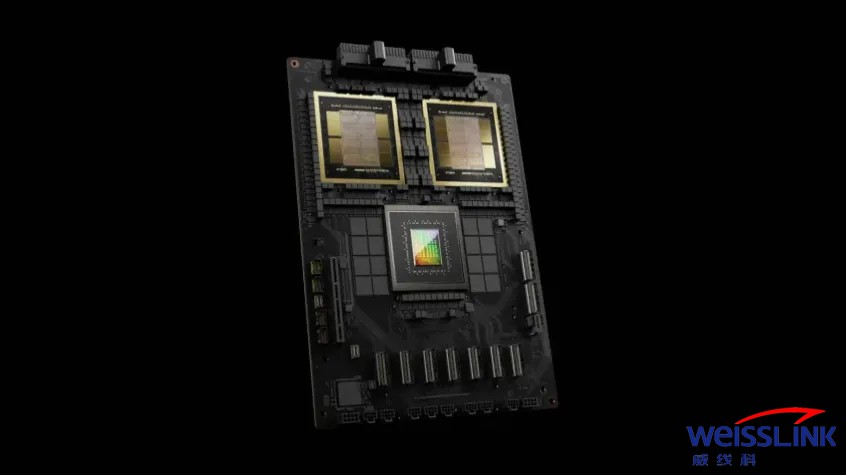

Nvidia updates its GPU architecture every two years, unlocking a significant jump in performance. Many of the AI models released over the past year were trained on the company's Hopper architecture, announced in 2022 and used in chips such as the H100. Nvidia said Blackwell-based processors such as the GB200 offer a major performance upgrade for AI companies, delivering 20 petaflops of AI performance (20 quadrillion calculations per second), five times that of the H100. Nvidia said the extra computing power will let AI companies train larger and more complex models, and that inference performance on large language models will be up to 30 times that of the H100.

The Blackwell GPU combines two separately manufactured dies into a single chip manufactured by TSMC. Nvidia also launched a server system called GB200 NVLink 2, which combines 72 Blackwell GPUs with other Nvidia components designed for training AI models.

Amazon, Google, Microsoft, and Oracle will sell access to the GB200 through their cloud services. The GB200 pairs two B200 Blackwell GPUs with an Arm-based Grace CPU. Nvidia said Amazon Web Services (AWS) will build a server cluster with 20,000 GB200 chips.

Nvidia also said the system can deploy a model with 27 trillion parameters, far larger than today's largest models, such as GPT-4, which reportedly has 1.7 trillion parameters. Many AI researchers believe that larger models with more parameters and more data could unlock new capabilities. Nvidia did not disclose the cost of the new GB200 or the systems built around it. Analysts estimate that Nvidia's Hopper-based H100 costs between $25,000 and $40,000 per chip, with entire systems costing as much as $200,000.

NIM software

Nvidia also announced that it is adding a new product called NIM to its Nvidia AI Enterprise software subscription. NIM makes it easier to use older Nvidia GPUs for inference, the process of running AI software, and lets businesses keep using the hundreds of millions of Nvidia GPUs they already own. Inference requires less computing power than the initial training of a new AI model. NIM lets companies that want to run their own AI models do so, instead of buying access to AI results as a service from companies such as OpenAI.

Nvidia's strategy is to get customers who buy Nvidia-based servers to sign up for Nvidia AI Enterprise, which carries an annual license fee of $4,500 per GPU. Nvidia will work with AI companies such as Microsoft and Hugging Face to ensure their models are tuned to run on all compatible Nvidia chips. With NIM, developers can efficiently run models on their own servers or on cloud-based Nvidia servers without a lengthy configuration process. Nvidia also said the software will help AI run on GPU-equipped laptops rather than on cloud servers.